RESEARCH

Introduction

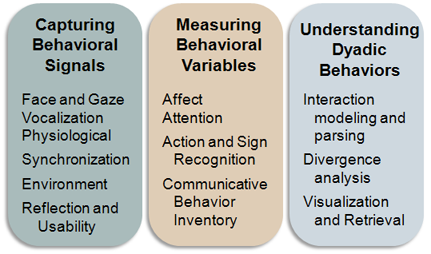

We will develop Behavior Imaging technologies that provide multi-modal sensing and modeling capabilities for the automated capture, measurement, analysis, and visualization of human behaviors. Just as 20th-century medical imaging technologies revolutionized internal medicine, behavior imaging technologies will usher in a new era of quantitative understanding of behavior. Our research activities are organized into three research thrusts: capturing behavioral signals, measuring behavioral variables, and understanding dyadic behaviors.

These thrusts reflect the systematic progression of our proposed work. It begins with the synchronized capture of multimodal data, such as video and audio, and moves to the measurement of behavioral variables, such as gaze and arousal. It culminates in an integrated understanding of complex dyadic behaviors. One example is a toddler's performance in a joint attention task with a clinician, which can indicate the child's risk for a variety of socio-emotional disorders.

Capturing Behavioral Signals

Video, audio, and wearable sensor data must be captured and analyzed to extract behavioral signals such as gaze information, hand motion, segmented speech, and physiological responses. The captured signals must be synchronized, and sensor placement must suit the setting, whether a clinic or a daycare. To maximize usability and respect privacy concerns, information about signal capture must be reflected back to non-experts in a clear and useful way.
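One basic step in synchronization is resampling streams recorded at different rates onto a common timeline. The sketch below is an illustration rather than the project's method. It assumes every stream already carries timestamps on a shared clock (for example after hardware sync or offset estimation) and uses nearest-sample alignment against a reference stream such as the video frame times. The function names and stream names are hypothetical.

```python
from bisect import bisect_left

def nearest_sample(timestamps, values, t):
    """Return the value whose timestamp is closest to t.

    Assumes `timestamps` is sorted ascending and in seconds on a shared clock.
    """
    i = bisect_left(timestamps, t)
    if i == 0:
        return values[0]
    if i == len(timestamps):
        return values[-1]
    before, after = timestamps[i - 1], timestamps[i]
    # Pick whichever neighbor is closer in time; ties go to the earlier sample.
    return values[i] if after - t < t - before else values[i - 1]

def align_to_reference(ref_times, streams):
    """Resample each stream onto the reference timeline (e.g. video frame times).

    `streams` maps a hypothetical stream name to a (timestamps, values) pair.
    Returns one dict per reference time: {stream_name: aligned_value}.
    """
    return [
        {name: nearest_sample(ts, vals, t) for name, (ts, vals) in streams.items()}
        for t in ref_times
    ]

# Example: a 4 Hz skin-conductance stream and a 10 Hz speech-activity flag,
# aligned to three reference frames.
aligned = align_to_reference(
    [0.0, 0.2, 0.4],
    {
        "gsr": ([0.0, 0.25, 0.5], [1.0, 2.0, 3.0]),
        "speech": ([0.0, 0.1, 0.2, 0.3, 0.4], [0, 0, 1, 1, 0]),
    },
)
```

Nearest-sample alignment is the simplest choice. Interpolation, or windowed averaging for fast signals such as audio, may suit some streams better.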

Measuring Behavioral Variables

Given a collection of behavioral signals, such as hand and face movements and speech utterances, a key challenge is to extract measurements of the behavioral variables needed to understand complex behaviors. For example, inferring where a child is attending at a given moment requires analyzing multiple behavioral signals, including gaze and pointing, within the overall context of an ongoing interaction. Another key challenge is integrating physiological measurements, such as galvanic skin response (GSR), with measurements of expressed behavior in the analysis of affect and attention.
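To make the idea of combining several signals concrete, the sketch below uses weighted late fusion. Each cue, such as gaze or pointing, scores a set of candidate attention targets, and the fused estimate is a weighted average of those scores. This is a swapped-in illustrative technique, not the project's method. The cue names, target names, and weights are hypothetical, and a real system would also need to model the interaction context.

```python
def fuse_attention(cue_scores, weights):
    """Combine per-cue scores over candidate targets into one attention estimate.

    cue_scores: {cue_name: {target: score in [0, 1]}}   (hypothetical cues)
    weights:    {cue_name: non-negative weight}          (hypothetical values)
    Returns (best_target, fused_scores).
    """
    fused = {}
    for cue, scores in cue_scores.items():
        w = weights.get(cue, 0.0)
        for target, s in scores.items():
            fused[target] = fused.get(target, 0.0) + w * s
    # Divide by the total weight so the result is a weighted average of the cues.
    total = sum(weights.get(cue, 0.0) for cue in cue_scores)
    if total > 0:
        fused = {t: v / total for t, v in fused.items()}
    best = max(fused, key=fused.get)
    return best, fused

best, fused = fuse_attention(
    {"gaze": {"ball": 0.6, "face": 0.4}, "pointing": {"ball": 0.9, "face": 0.1}},
    {"gaze": 0.7, "pointing": 0.3},
)
```

Here both cues favor the ball, so the fused estimate selects it. When cues disagree, the weights decide which one dominates.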

Understanding Dyadic Behaviors

Given measurements of behavioral variables over time, the remaining challenge is to identify, analyze, and quantify complex behavioral interactions between a child and another actor. Joint attention, for example, requires analyzing the attentional state of both actors over time, along with interpreting actions (such as pointing at a ball) and vocalizations. We are interested both in recognizing behaviors when they occur and in measuring relevant parameters of the interaction, such as, during joint attention, the child's delay in shifting gaze between a toy and a social partner's face.
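The delay in shifting gaze between a toy and a social partner's face can be measured from a time-stamped sequence of per-frame gaze labels. The sketch below assumes such labels already exist, for example from an upstream gaze estimator. It uses one hypothetical operational definition of the delay: the time from the child's first look at the toy (at or after a chosen start time) to the first later look at the partner's face. The label strings and function name are illustrative.

```python
from typing import Optional, Sequence, Tuple

def gaze_shift_delay(
    samples: Sequence[Tuple[float, str]],
    start: float = 0.0,
    source: str = "toy",
    target: str = "face",
) -> Optional[float]:
    """Seconds from the first look at `source` (at or after `start`) to the
    first later look at `target`; None if no such shift occurs.

    `samples` is a time-ordered list of (time_in_seconds, gaze_label) pairs.
    """
    source_time = None
    for t, label in samples:
        if t < start:
            continue  # ignore frames before the episode of interest
        if source_time is None:
            if label == source:
                source_time = t  # child first fixates the toy
        elif label == target:
            return t - source_time  # child shifts to the partner's face
    return None

# Example at 10 Hz: the child looks at the toy at 0.1 s and at the face at 0.5 s.
samples = [(0.0, "away"), (0.1, "toy"), (0.2, "toy"),
           (0.3, "toy"), (0.4, "away"), (0.5, "face")]
delay = gaze_shift_delay(samples)  # approximately 0.4 seconds
```

Other definitions are equally plausible, such as timing from when gaze leaves the toy or from the clinician's bid. The choice should match the clinical protocol being scored.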

Autism: A Motivating Research Domain

Autism and related developmental delays are a specific focus of this project. They constitute a concrete, actively studied, and socially relevant domain in which to develop behavior imaging technologies. Two application contexts will integrate our research activities:

- Rapid-ABC Screener is a behavioral screening instrument for early detection of risk for autism and related developmental disabilities. It is based on a scripted sequence of interactions between a clinician and a child.
  - A technical paper describing this screener was written by its creators: Opal Ousley, Rosa Arriaga, Michael Morrier, Jennifer Mathys, Monica Allen, and Gregory Abowd.

- Behavior shaping is a widely used therapy for behavioral and communicative skills, in which selective reinforcement of desired behaviors guides the child's development.