The Secrets of Salient Object Segmentation

The Two Worlds of Saliency

Fixation Prediction

Compute a probabilistic map of an image that predicts actual human eye-gaze patterns

State-of-the-art

- ITTI [Itti et al. PAMI 98]

- AIM [Bruce et al. NIPS 05]

- GBVS [Harel et al. NIPS 06]

- DVA [Hou et al. NIPS 08]

- SUN [Zhang et al. JOV 08]

- SIG [Hou et al. PAMI 12]

Salient Object Segmentation

Generate masks that match the annotated silhouettes of salient objects

State-of-the-art

- FT [Achanta et al. CVPR 09]

- GC [Cheng et al. CVPR 11]

- SF [Perazzi et al. CVPR 12]

- PCA-S [Margolin et al. CVPR 13]

- And more ...

The PASCAL-S Dataset

Bridge the gap between fixations and salient objects

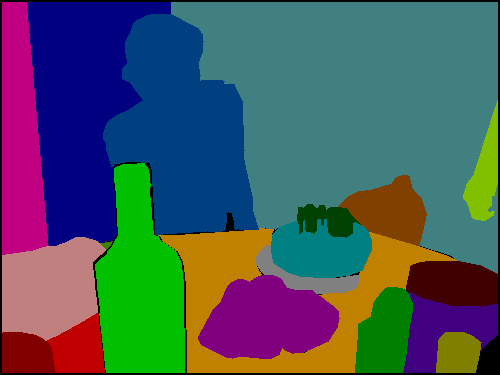

Original Image

Images from the validation set of PASCAL VOC 2010

Full Segmentation

Each image is manually segmented for salient object annotation

Eye Fixations

Eye-tracking data recorded from multiple subjects during two seconds of free viewing

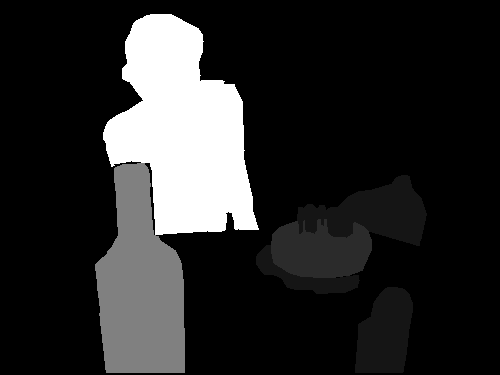

Salient Object Masks

Salient objects selected by multiple subjects using the segmentations
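One plausible way to turn the per-subject selections into a single annotation is to give each segment the fraction of subjects who chose it. The sketch below assumes that aggregation rule; the poster does not spell it out, and the names `aggregate_selections`, `segment_map`, and `selections` are hypothetical.

```python
import numpy as np

def aggregate_selections(segment_map, selections):
    """Turn per-subject segment choices into a graded salient object mask.

    segment_map: 2-D integer array; each pixel holds the id of its segment.
    selections:  one collection of chosen segment ids per subject.
    Returns a float array in [0, 1]: the fraction of subjects who selected
    the segment each pixel belongs to (an assumed aggregation rule).
    """
    segment_map = np.asarray(segment_map)
    mask = np.zeros(segment_map.shape, dtype=float)
    for chosen in selections:
        # Boolean map of the pixels covered by this subject's chosen segments.
        mask += np.isin(segment_map, list(chosen))
    return mask / len(selections)

# Toy example: three segments (ids 0, 1, 2) and four subjects.
segment_map = np.array([[0, 0, 1],
                        [0, 2, 1]])
selections = [{1}, {1, 2}, {1}, {1}]
graded = aggregate_selections(segment_map, selections)
```

Thresholding `graded` (for example at 0.5) would give a binary mask containing only the segments most subjects agreed on.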

Dataset statistics: images from PASCAL 2010, object instances, and subjects

Benchmarks

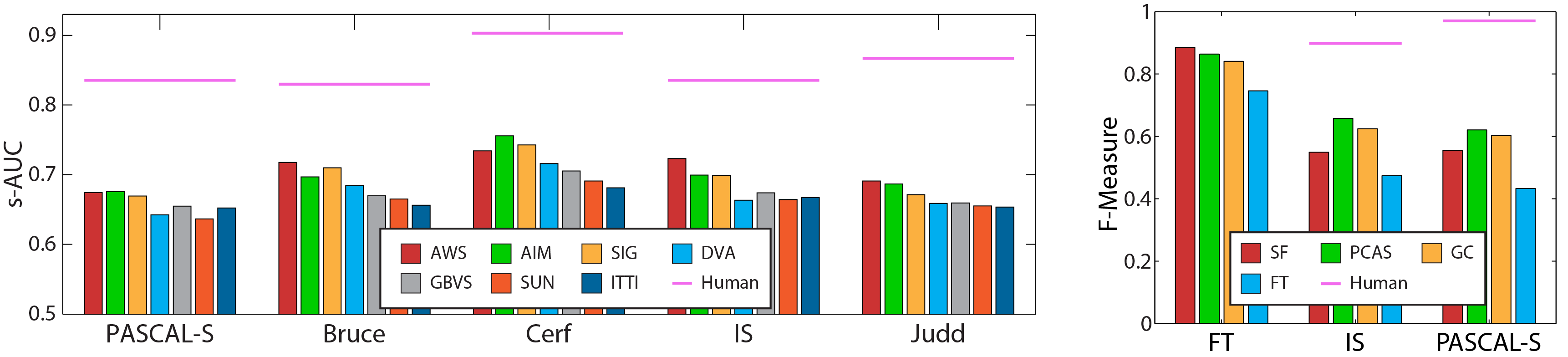

Left: Fixation prediction results on PASCAL-S, Bruce [2], Cerf [9], IS [10] and Judd [11] with 7 different algorithms and human consistency. Algorithms include AWS [14], AIM [2], SIG [5], DVA [6], GBVS [4], SUN [13] and ITTI [7].

Right: Salient object segmentation results on FT [1], IS [10] and PASCAL-S with 4 different algorithms and human consistency. Algorithms include SF [8], PCA-S [12], GC [3] and FT [1].

Our Findings

Our Method

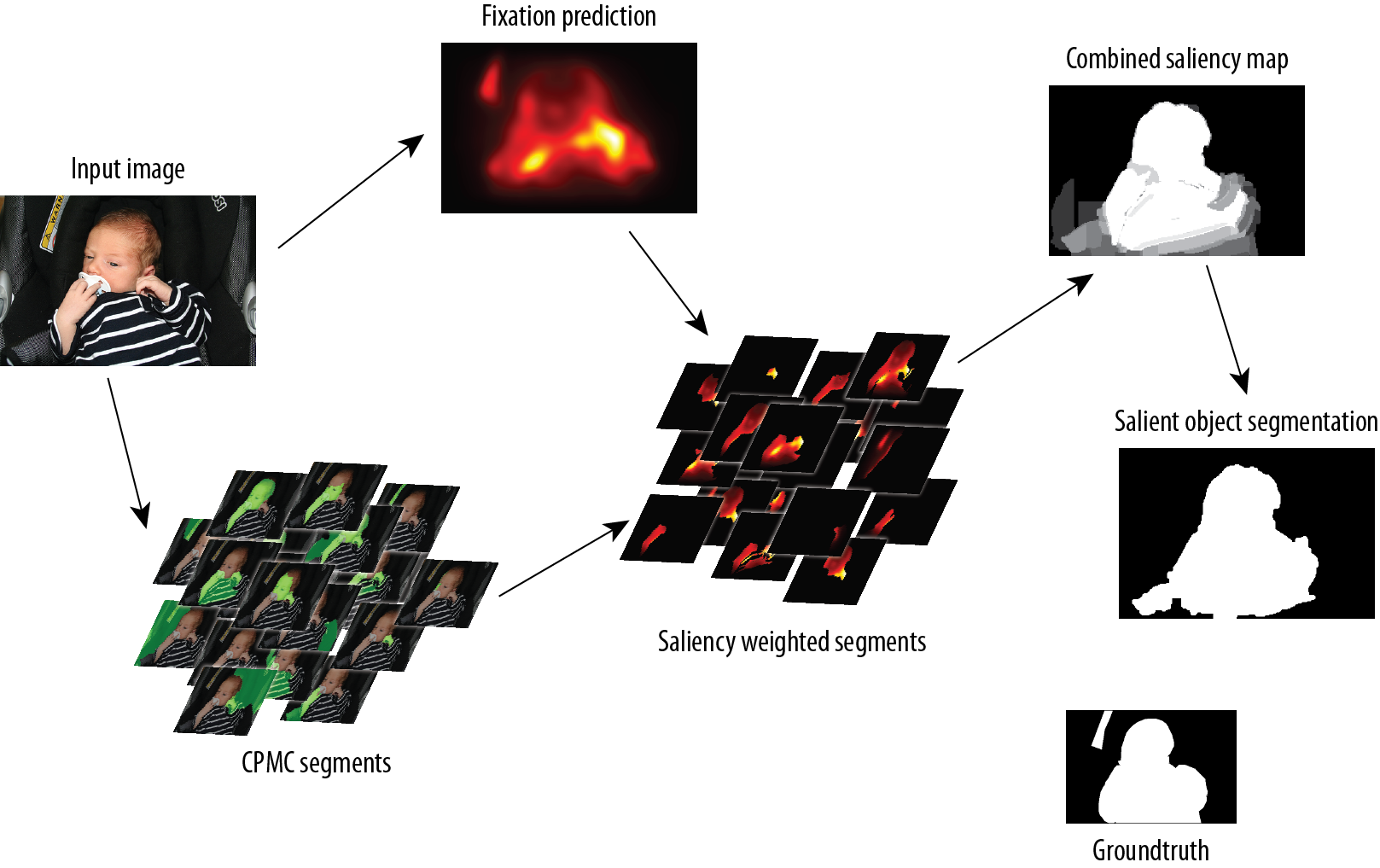

We propose a salient object segmentation model that combines object proposals with fixation prediction.

Our core idea is to first generate a set of object candidates, and then use the fixation algorithms to rank different regions based on their saliency.
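The two-stage idea can be sketched as follows. This is a minimal illustration, not the poster's scoring function: it assumes candidate masks are already available from some object proposal method, that the fixation map is non-negative, and that a segment's score is simply the mean fixation value inside it. The function name `rank_segments` and its parameters are hypothetical.

```python
import numpy as np

def rank_segments(candidate_masks, fixation_map, top_k=20):
    """Score each candidate by mean fixation saliency and average the best.

    candidate_masks: array of shape (N, H, W), boolean object proposals.
    fixation_map:    array of shape (H, W), non-negative fixation prediction.
    Returns (saliency, order): a map in [0, 1] and the candidate indices
    sorted from most to least salient.
    """
    masks = np.asarray(candidate_masks, dtype=bool)
    fixation_map = np.asarray(fixation_map, dtype=float)
    flat = masks.reshape(len(masks), -1)
    areas = flat.sum(axis=1)
    # Total fixation value inside each mask, then the per-pixel mean.
    energy = (flat * fixation_map.reshape(-1)).sum(axis=1)
    scores = np.where(areas > 0, energy / np.maximum(areas, 1), 0.0)
    order = np.argsort(-scores, kind="stable")
    top = order[:top_k]
    weights = scores[top]
    if weights.sum() == 0:
        return np.zeros(fixation_map.shape), order
    # Score-weighted average of the top-k masks.
    saliency = np.tensordot(weights, masks[top].astype(float), axes=1)
    return saliency / weights.sum(), order

# Toy example: three candidates on a 2x3 fixation map.
fixation = np.array([[0.9, 0.8, 0.1],
                     [0.7, 0.2, 0.0]])
candidates = np.array([
    [[1, 1, 0], [1, 0, 0]],   # covers the high-fixation region
    [[0, 0, 1], [0, 1, 1]],   # covers the low-fixation region
    [[1, 1, 1], [1, 1, 1]],   # covers the whole image
])
saliency, order = rank_segments(candidates, fixation, top_k=2)
```

A plain mean favors small segments that sit on a fixation peak, so a practical scoring function would likely also take segment shape and size into account.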

Results

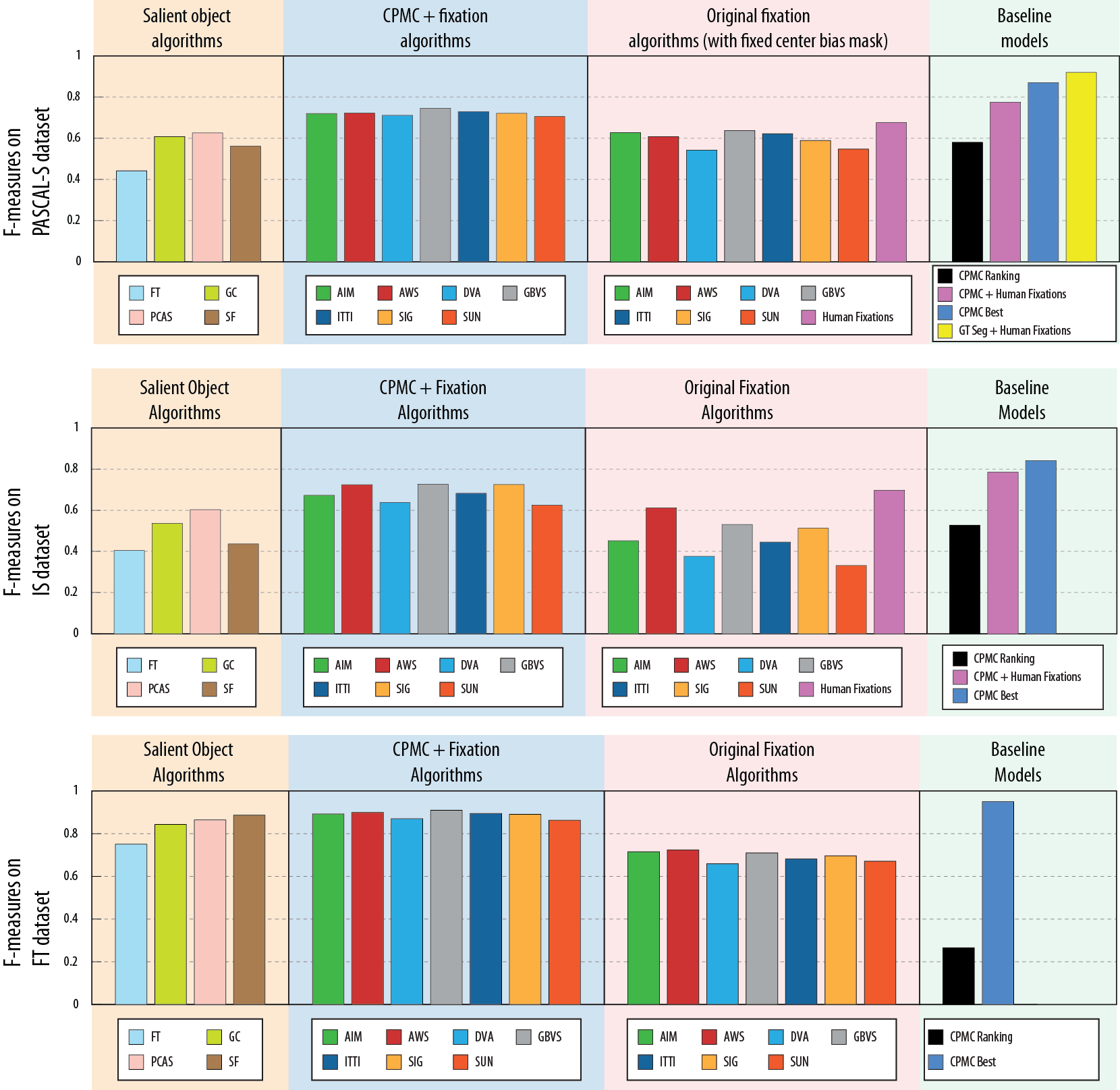

The F-measures of all algorithms on the PASCAL-S, IS [10] and FT [1] datasets. All CPMC+Fixation results are obtained using the top K = 20 segments. Our method with GBVS [4] outperforms state-of-the-art methods on salient object segmentation.
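For reference, an F-measure between a binary prediction and a ground-truth mask can be computed as below. This is a sketch under one assumption: the weighting `beta2 = 0.3` is a convention common in the salient object segmentation literature, and the poster does not state which value it uses. The name `f_measure` is hypothetical.

```python
import numpy as np

def f_measure(pred_mask, gt_mask, beta2=0.3):
    """Weighted F-measure between two binary masks.

    beta2 is the squared beta weight; beta2 = 1 gives the ordinary F1 score.
    The default of 0.3 is an assumption, not a value taken from the poster.
    """
    pred = np.asarray(pred_mask, dtype=bool)
    gt = np.asarray(gt_mask, dtype=bool)
    true_pos = np.logical_and(pred, gt).sum()
    if true_pos == 0:
        return 0.0
    precision = true_pos / pred.sum()
    recall = true_pos / gt.sum()
    return float((1 + beta2) * precision * recall / (beta2 * precision + recall))

# Toy example: the prediction covers the object plus two background pixels.
pred = np.array([[1, 1, 1],
                 [0, 0, 0]])
gt = np.array([[1, 0, 0],
               [0, 0, 0]])
score = f_measure(pred, gt)
```

Because `beta2` is below 1, the score weights precision more heavily than recall, which penalizes masks that spill onto the background.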

References

- [1] R. Achanta, S. Hemami, F. Estrada, and S. Susstrunk. Frequency-tuned salient region detection. CVPR 2009

- [2] N. Bruce and J. Tsotsos. Saliency based on information maximization. NIPS 2005

- [3] M.-M. Cheng, G.-X. Zhang, N. J. Mitra, X. Huang, and S.-M. Hu. Global contrast based salient region detection. CVPR 2011

- [4] J. Harel, C. Koch, and P. Perona. Graph-based visual saliency. NIPS 2006

- [5] X. Hou, J. Harel, and C. Koch. Image signature: Highlighting sparse salient regions. TPAMI 2012

- [6] X. Hou and L. Zhang. Dynamic visual attention: Searching for coding length increments. NIPS 2008

- [7] L. Itti, C. Koch, and E. Niebur. A model of saliency-based visual attention for rapid scene analysis. TPAMI 1998

- [8] F. Perazzi, P. Krahenbuhl, Y. Pritch, and A. Hornung. Saliency filters: Contrast based filtering for salient region detection. CVPR 2012

- [9] M. Cerf, J. Harel, W. Einhauser, and C. Koch. Predicting human gaze using low-level saliency combined with face detection. NIPS 2008

- [10] J. Li, M. D. Levine, X. An, X. Xu, and H. He. Visual saliency based on scale-space analysis in the frequency domain. TPAMI 2013

- [11] T. Judd, K. Ehinger, F. Durand, and A. Torralba. Learning to predict where humans look. ICCV 2009

- [12] R. Margolin, A. Tal, and L. Zelnik-Manor. What makes a patch distinct? CVPR 2013

- [13] L. Zhang, M. H. Tong, T. K. Marks, H. Shan, and G. W. Cottrell. SUN: A Bayesian framework for saliency using natural statistics. Journal of Vision 2008

- [14] A. Garcia-Diaz, V. Leboran, X. R. Fdez-Vidal, and X. M. Pardo. On the relationship between optical variability, visual saliency, and eye fixations: A computational approach. Journal of Vision 2012